To improve entrepreneurial outcomes and hold innovators accountable, we need to focus on the boring stuff: how to measure progress, how to set up milestones, and how to prioritize work. This requires a new kind of accounting designed for startups—and the people who hold them accountable.

—Eric Ries, The Lean Startup [1]

Applied Innovation Accounting in SAFe

by Joe Vallone, Principal Consultant, SPCT/SAFe Fellow

Introduction

Developing innovative world-class solutions is an inherently risky and uncertain process. This uncertainty causes some enterprises to avoid taking the right risks. When they do take risks, it increases the likelihood that they will spend too much time and money building the wrong thing, based on flawed data or invalid assumptions. As Eric Ries observed, “What if we found ourselves building something that nobody wanted? In that case, what did it matter if we did it on time and on budget?”[2]

Unfortunately, traditional financial and accounting metrics have not evolved to support investments in innovation and business agility. As a result, organizations often use lagging financial indicators such as profit and loss (P&L) and return on investment (ROI) to measure the progress of their technology investments. While these are useful rear-view mirror business measures, the results arrive far too late in the solution lifecycle to inform the actual solution development. Even Net Present Value (NPV) and Internal Rate of Return (IRR), though more forward-looking, are based on estimates of unknowable future cash returns and on speculative assumptions about investment costs and discount rates. Moreover, even when used in conjunction with a sensitivity analysis (i.e., “what if” calculations), these financial metrics neither reflect nor inform the learning obtained from incremental product development. Thus, these traditional metrics aren’t helpful in our move to iterative, incremental delivery and business agility.

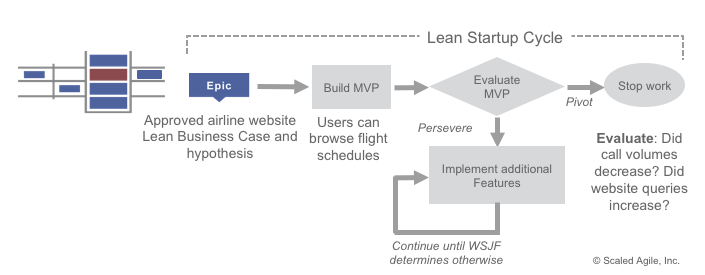

We need a better plan, a different kind of economic framework: one that quickly validates product assumptions and increases learning. In SAFe, this is reflected, in part, by applying the Lean Startup Cycle (see Figure 1), a highly iterative cycle that provides the opportunity to quickly evaluate large initiatives and measure viability using a different kind of financial measure: Innovation Accounting.

What is Innovation Accounting?

Innovation Accounting is a term coined by Eric Ries in The Lean Startup. The Innovation Accounting framework consists of three learning milestones:

- Minimum Viable Product (MVP) – establish a baseline to test assumptions and gather objective data.

- Tune the Engine – quickly adjust and move towards the goal, based on the data gathered.

- Pivot or Persevere – decide whether to deliver additional value or move on to something more valuable, based on the validated learning. (Lean thinking defines value as providing benefit to the customer; anything else is waste.)[2]

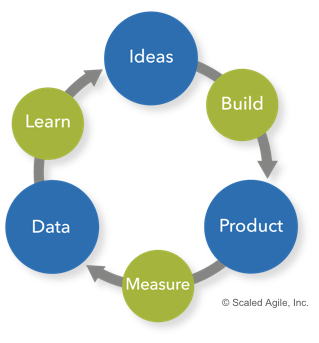

To validate learning and reduce waste, a fast feedback loop, also referred to as ‘build-measure-learn,’ is essential, as illustrated in Figure 2. Applying the learning obtained from real customer feedback results in increased predictability, decreased waste, and improved shareholder value.

Leading Indicators versus Vanity Metrics

Innovation Accounting asks us to consider two questions: 1) Are we making progress towards our outcome hypothesis? 2) How do we know? In The Lean Startup, this is known as a “leap of faith assumption,” one that requires that we understand and validate our value and growth hypotheses before we move further forward with execution. This becomes a basic part of the economic framework that drives effective solution development.

To answer these questions, and make better economic decisions, we support innovation accounting by using leading indicators, actionable metrics focused on measuring specific early outcomes using objective data. Leading indicators are designed to harvest the results of development and deployment of a Minimum Viable Product (MVP). These indicators may include non-standard financial metrics such as active users, hours on a website, revenue per user, net promoter score, and more.

It’s important to be aware of vanity metrics, which are indicators that do not truly measure the potential success or failure of an initiative or its real value. While they may be easy to collect and manipulate, they do not necessarily provide insights on how the customer will use the product or service. Measures such as the number of registered users, the number of raw page views, and the number of downloads may provide some useful information or make us feel good about our development efforts, but they may be insufficient to provide the evidence necessary to decide if we should pivot or persevere with the initiative’s MVP.

There are some practical ways we can avoid being deceived by vanity metrics and instead work to evaluate our hypothesis. A/B testing, or split testing, enables us to validate our outcome hypothesis using actionable data. For example, group A may get the new feature while group B does not. By establishing a control group, we can evaluate the results against our hypothesis and make decisions as part of our feedback loop.

We can also avoid vanity metrics by focusing on customer-driven data. We can use cohort analysis to examine the use of a new product, service, or feature over time as it pertains to a cohort (group). For example, suppose we wanted to see how a new feature on our website was improving the conversion rate to paying customers. We could treat the new registrations in each week as a cohort and report on the percentage of each cohort that converts to paying customers. We can analyze this information on a weekly basis and see if the conversion rate remains constant for each cohort (the group of registered users). If it does, we have a clear indication of how the feature is affecting the conversion rate. If it does not remain constant, then we have a chance to tune the engine or pivot.
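The two techniques above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed SAFe method: the function names, the week labels, and all of the numbers are hypothetical, and the two-proportion z-test is one common way (an assumption on our part) to compare a treatment group with a control group.

```python
from collections import defaultdict
from math import erf, sqrt


def conversion_by_cohort(users):
    """Return {signup_week: conversion rate} from (signup_week, converted) pairs."""
    totals = defaultdict(int)
    paid = defaultdict(int)
    for week, converted in users:
        totals[week] += 1
        if converted:
            paid[week] += 1
    return {week: paid[week] / totals[week] for week in sorted(totals)}


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates.

    Group A received the new feature; group B is the control group.
    """
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("conversion rate is 0% or 100% in both groups")
    z = (conv_a / n_a - conv_b / n_b) / std_err
    # Standard normal CDF expressed with the error function.
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return z, p_value


# Hypothetical registrations: (week the user registered, became a paying customer?)
users = [
    ("2021-W01", True), ("2021-W01", False), ("2021-W01", False), ("2021-W01", False),
    ("2021-W02", True), ("2021-W02", True), ("2021-W02", False), ("2021-W02", False),
]
rates = conversion_by_cohort(users)
print(rates)

# Hypothetical A/B result: 120 of 1000 converted with the feature, 100 of 1000 without.
z, p_value = two_proportion_z_test(120, 1000, 100, 1000)
print(f"z = {z:.2f}, p = {p_value:.3f}")
```

Unlike a raw count of registered users, both results are ratios tied to a customer behavior we care about, which is what makes them candidates for actionable metrics.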

Applying Innovation Accounting in SAFe

Measuring Epics

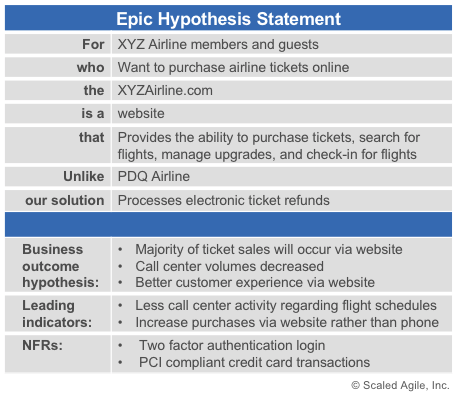

The implementation of large, future-looking initiatives is an opportunity for organizations to reduce waste and improve economic outcomes. In SAFe, large initiatives are represented as Epics and are captured using an epic hypothesis statement. This tool defines the initiative, its expected benefit outcomes, and the leading indicators used to validate progress toward its hypothesis.

Example of Airline Website Epic

For example, consider an airline that wants to develop a website for purchasing tickets. This is a significant endeavor that will consume a considerable amount of time and money. Before attempting to design and build the entire initiative, the epic hypothesis statement template should be used to develop a hypothesis, test assumptions, and gain knowledge regarding the expected outcome (see Figure 3).

We might hypothesize that the website will help reduce call center volume and ultimately reduce costs to the airline, resulting in better profit margins per ticket sold. Thus, one assumption we are making is that the website will be faster and easier than a phone call to the customer service department.

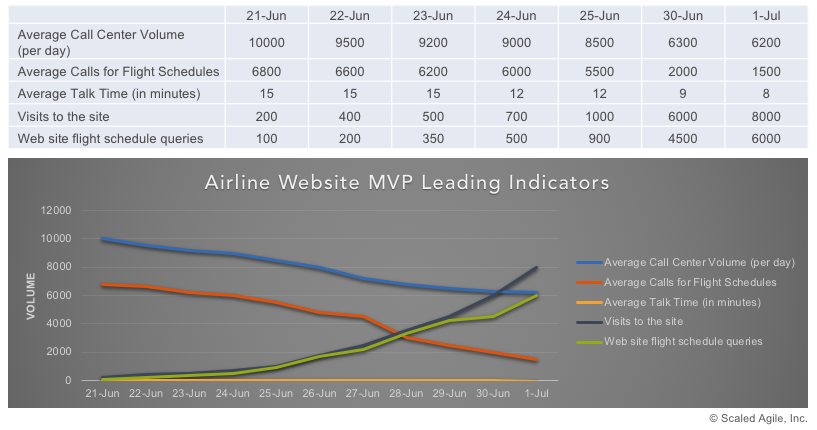

To test that hypothesis, we could release an incremental feature or set of features, i.e., an MVP that allows customers to research flight schedules. To validate and measure the efficacy of the feature(s), we could analyze the call volume as well as the types of questions the help desk received. Then we could quickly compare the trends of inquiries on the website vs. those at the call center. Additionally, using good DevOps practices, we could build telemetry into the feature and analyze customer interaction. The information captured in Figure 4 shows that call center activity has decreased and use of the website has increased. These leading indicators demonstrate that the MVP appears to validate our hypothesis.
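As a rough sketch of how such a trend comparison might be automated, the Python below fits a least-squares slope to each weekly series and checks whether the two trends move in the directions the hypothesis predicts. The function names and the weekly figures are hypothetical and are not taken from Figure 4.

```python
def trend_slope(values):
    """Least-squares slope of a series against its index (units per period)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two observations")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    numerator = sum((i - mean_x) * (v - mean_y) for i, v in enumerate(values))
    denominator = sum((i - mean_x) ** 2 for i in range(n))
    return numerator / denominator


def mvp_signal(call_volume, web_lookups):
    """Summarize whether calls are falling while website use is rising."""
    call_slope = trend_slope(call_volume)
    web_slope = trend_slope(web_lookups)
    return {
        "call_slope": call_slope,
        "web_slope": web_slope,
        "supports_hypothesis": call_slope < 0 and web_slope > 0,
    }


# Hypothetical weekly counts collected after the MVP release.
schedule_calls = [1000, 950, 900, 850]   # schedule inquiries at the call center
schedule_lookups = [100, 200, 300, 400]  # schedule searches on the website

signal = mvp_signal(schedule_calls, schedule_lookups)
print(signal)
```

A slope in the expected direction is only an early signal. Seasonality or a marketing campaign could produce the same pattern, so the result is best read alongside the types of questions the help desk still receives.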

Leading indicators can provide immediate feedback on usage patterns. By analyzing the objective data, we can test our hypothesis and decide to continue releasing additional features, tune the engine, or pivot to something else. Thus, the Epic’s MVP and the leading indicators enable us to make faster economic decisions based on facts and findings. It is interesting to note that in Figure 4, the “visits to the site” metric by itself might be considered a vanity metric. On its own, this metric doesn’t tell us much about the success of our MVP or the viability of the Epic. However, placed in context with the other metrics, it gives us an indication of where customers are spending their time when they visit the website.

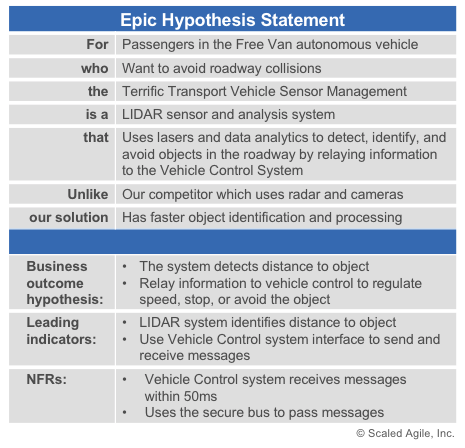

Example of Autonomous Vehicle Epic

With their direct connection to large numbers of users and their interactions, as well as the ability to quickly evolve the UI, websites are a convenient place to consider how to apply Innovation Accounting. However, Innovation Accounting has far broader applicability than that. Let’s consider a different example, an Epic which describes the sensor system of a new autonomous vehicle.

Our epic hypothesis (see Figure 5) is that the sensor system will quickly detect and help us to avoid collisions with objects. Our assumption is that the information will be provided fast enough for the vehicle to stop or take evasive action (as that is the entire point!).

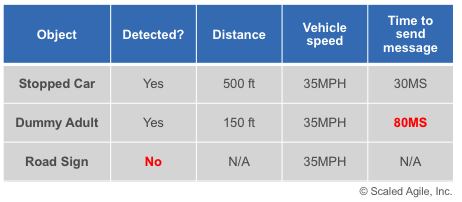

To test the hypothesis, we would like to find out if the sensor system can detect objects and if it is indeed fast enough for our purposes before we build the entire system. We could build a single sensor and basic data capture software to validate the detected distance to the object and the speed of the vehicle control system interface. As an MVP, suppose we mount the sensor on the front of a car and connect it to a laptop within the vehicle. Next, we place several objects on a test track and drive the vehicle toward them. We could use the software to record information from the sensor as our leading indicator. We could also use software to measure how long it takes for the message to be generated and sent to the vehicle control system interface, using a mocking framework instead of waiting for the vehicle control system to be built. This would give us an early indication of compliance with our nonfunctional requirement (NFR). Figure 6 describes the leading indicators for this cyber-physical system.
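The mocking approach described above might look like the following Python sketch, which uses the standard library’s `unittest.mock.Mock` in place of the unbuilt vehicle control system. The message format, the `send` method, the sensor readings, and the 10 ms threshold are all hypothetical placeholders, not values from the Epic.

```python
import time
from unittest.mock import Mock

MAX_LATENCY_S = 0.010  # hypothetical NFR: message sent within 10 ms


def build_message(distance_m, speed_mps):
    """Build a hypothetical collision-warning message from one sensor reading."""
    time_to_impact = distance_m / speed_mps if speed_mps > 0 else None
    return {
        "distance_m": distance_m,
        "speed_mps": speed_mps,
        "time_to_impact_s": time_to_impact,
    }


def measure_send_latency(readings, control_interface):
    """Time message generation plus the send call for each reading, in seconds."""
    latencies = []
    for distance_m, speed_mps in readings:
        start = time.perf_counter()
        control_interface.send(build_message(distance_m, speed_mps))
        latencies.append(time.perf_counter() - start)
    return latencies


# The Mock records every call, so no real control system is needed yet.
control_interface = Mock()

# Hypothetical readings from a test-track run: (distance in m, speed in m/s).
readings = [(50.0, 20.0), (30.0, 20.0), (10.0, 20.0)]
latencies = measure_send_latency(readings, control_interface)

worst = max(latencies)
print(f"worst-case latency: {worst * 1000:.3f} ms")
print("within NFR" if worst <= MAX_LATENCY_S else "exceeds NFR")
```

Because the mock returns immediately, this measures only the software path on the laptop. It gives a lower bound on message time, which is still useful as an early leading indicator before the real interface exists.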

Based on the data generated during the MVP testing, we have some more questions to answer before we move forward with additional features in the Epic. Perhaps we can tune the engine and see if we can decrease the message sending time. Why didn’t the sensor detect the road sign? Would going slower have helped for the initial tests? What happens if we place the sensor on the roof of the car? Based on the answer to these and other questions, we may need to pivot to a different technology.

‘Failing fast’ is an Agile value. The implication is that failure in small batch sizes is acceptable, as long as we learn from it. Thus, validated learning becomes the primary objective of testing the hypothesis. As previously mentioned, SAFe uses the Lean Startup Cycle to iteratively evaluate the MVP of Epics and to make the decision to pivot (change direction, not strategy) or persevere (keep moving forward) against the outcome hypothesis. This is done incrementally by developing and evaluating features from the Epic. The empirical metric we use to prioritize the features is Weighted Shortest Job First (WSJF). Using WSJF, we can rapidly evaluate the economic value of the feature and the overall progress of the Epic towards its Business Outcome. This allows us to quickly and iteratively make a pivot-or-persevere decision based on objective data.
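For readers unfamiliar with WSJF, the sketch below shows the calculation as SAFe generally describes it: Cost of Delay (the sum of user-business value, time criticality, and risk reduction or opportunity enablement) divided by job size. The feature names and relative scores are hypothetical, chosen only to illustrate the ranking.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    business_value: int    # relative user-business value
    time_criticality: int  # how quickly the value decays
    risk_reduction: int    # risk reduction and/or opportunity enablement
    job_size: int          # relative size, a proxy for duration

    @property
    def cost_of_delay(self) -> int:
        return self.business_value + self.time_criticality + self.risk_reduction

    @property
    def wsjf(self) -> float:
        return self.cost_of_delay / self.job_size


def prioritize(features):
    """Order features from highest to lowest WSJF."""
    return sorted(features, key=lambda f: f.wsjf, reverse=True)


# Hypothetical features from the airline website Epic.
features = [
    Feature("Seat selection", 5, 3, 2, 5),           # 10 / 5  = 2.0
    Feature("Purchase tickets", 13, 8, 5, 8),        # 26 / 8  = 3.25
    Feature("Search flight schedules", 8, 5, 3, 2),  # 16 / 2  = 8.0
]

for feature in prioritize(features):
    print(f"{feature.name}: WSJF = {feature.wsjf:.2f}")
```

In this made-up example, the small schedule-search feature ranks first because it delivers meaningful value for little effort, which is also what makes it a reasonable MVP candidate.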

The decision to persevere indicates there is still additional economic benefit. Leading indicators validate our hypothesis and MVP, resulting in the development and release of additional features. The decision to pivot may occur when sufficient value has been delivered or on learning that our hypothesis was incorrect. Deciding to pivot is so difficult that many companies fail to do it.[2] Often, companies will continue to invest in an initiative despite data to the contrary, or despite a lack of supporting data. By using fast feedback loops and leading indicators, we have the opportunity to reduce wasted money and time and to avoid working on features that customers don’t want or need.

It’s important to note that Innovation Accounting, as applied to SAFe, does not consider sunk cost (i.e., money already invested). To pivot without mercy or guilt, we must ignore sunk cost, as discussed in SAFe Principle #1, Take an economic view. Although Lean and Agile budgeting may allocate funding to a value stream up front, we use the Lean Startup Cycle to continually evaluate the benefit hypothesis based on value delivered rather than on money or time spent. Consequently, the initial allocation of funds does not equate to the actual spending of funds, which is why the decision to pivot or persevere is a critically important financial decision.

Summary

Variability and risk are part of every large technology initiative. However, traditional financial metrics used to measure the value of those initiatives during development have not evolved to address the need for innovation and business agility. Innovation Accounting was developed as part of the Lean Startup Cycle to provide validated learning and reduce waste. Developing and measuring a Minimum Viable Product validates the hypothesis, obtains results, and reduces risk before committing to the investment in the entire system. This fast feedback loop, based on objective data and actionable metrics, enables incremental learning. Focusing on the right leading indicators helps us make the vital pivot-or-persevere decision. SAFe’s use of Innovation Accounting and the Lean Startup Cycle enables the enterprise to reduce waste and accelerate learning while enhancing business outcomes.

Learn More

[1] http://knowledge.wharton.upenn.edu/article/eric-ries-on-the-lean-startup/

[2] Ries, Eric. The Lean Startup: How Today’s Entrepreneurs Use Continuous Innovation to Create Radically Successful Businesses. The Crown Publishing Group, 2011.

Last update: 11 October 2021